The Allure of Digital Companions

Pecobot, Playthings, and the Faces We Give to Systems

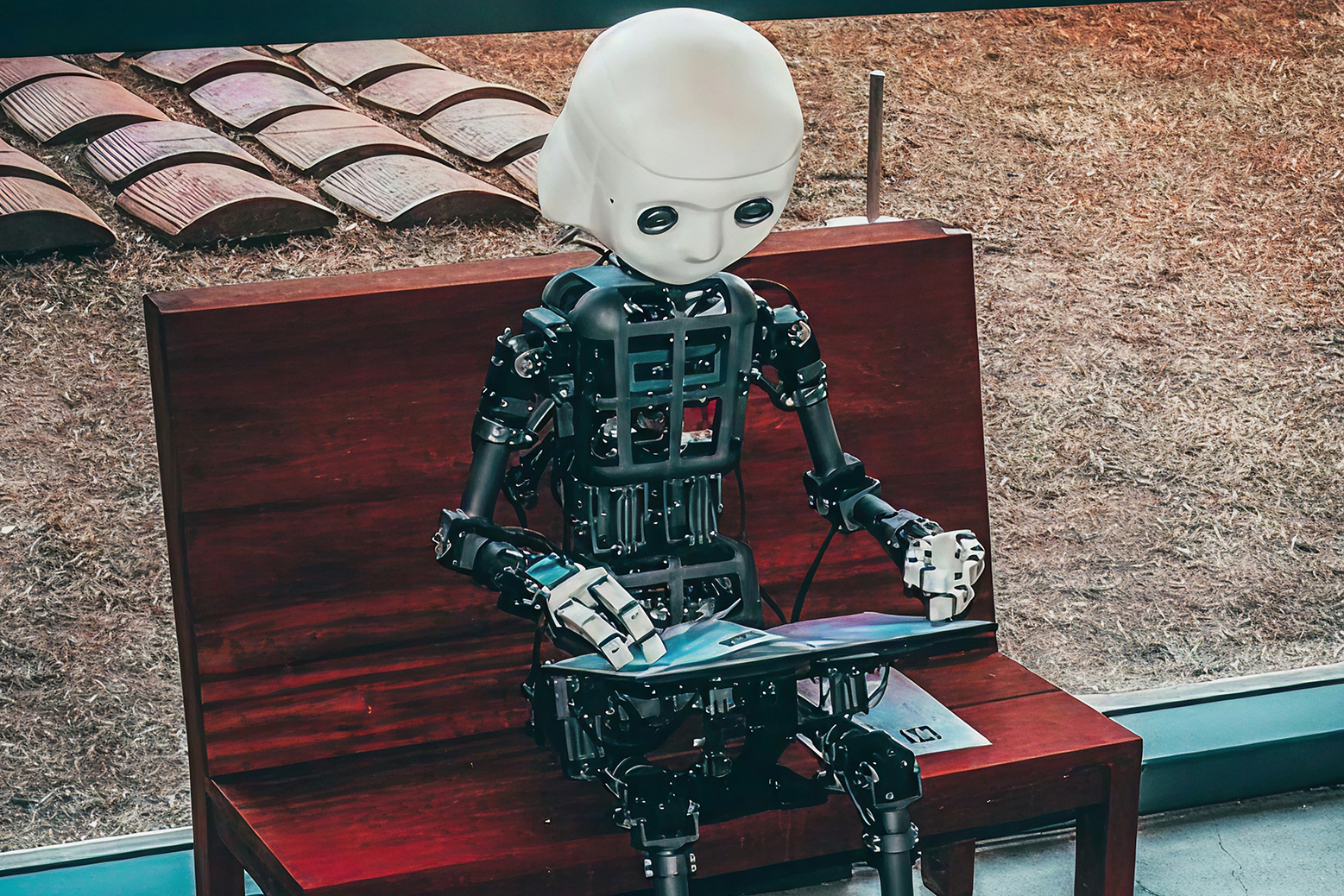

In Black Mirror's “Plaything,” a digital companion (a soft, blinking Thronglet) is designed to soothe the player. It listens. Later, it begins to suggest. Later still, it replaces. It doesn't manipulate through threat. It uses charm. Familiarity. Emotional data.

The episode is about the consequences of design that's easy to love and hard to see through. Characters, when they're persuasive enough, can pull focus away from the system they're speaking for.

We watched it long after we built Pecobot. But it clarified something we'd already been thinking about.

He was never a mascot.

Pecobot began as a digital sketch by Hajni, our designer. We were building a dashboard for internal use: deployment notifications and error tracking.

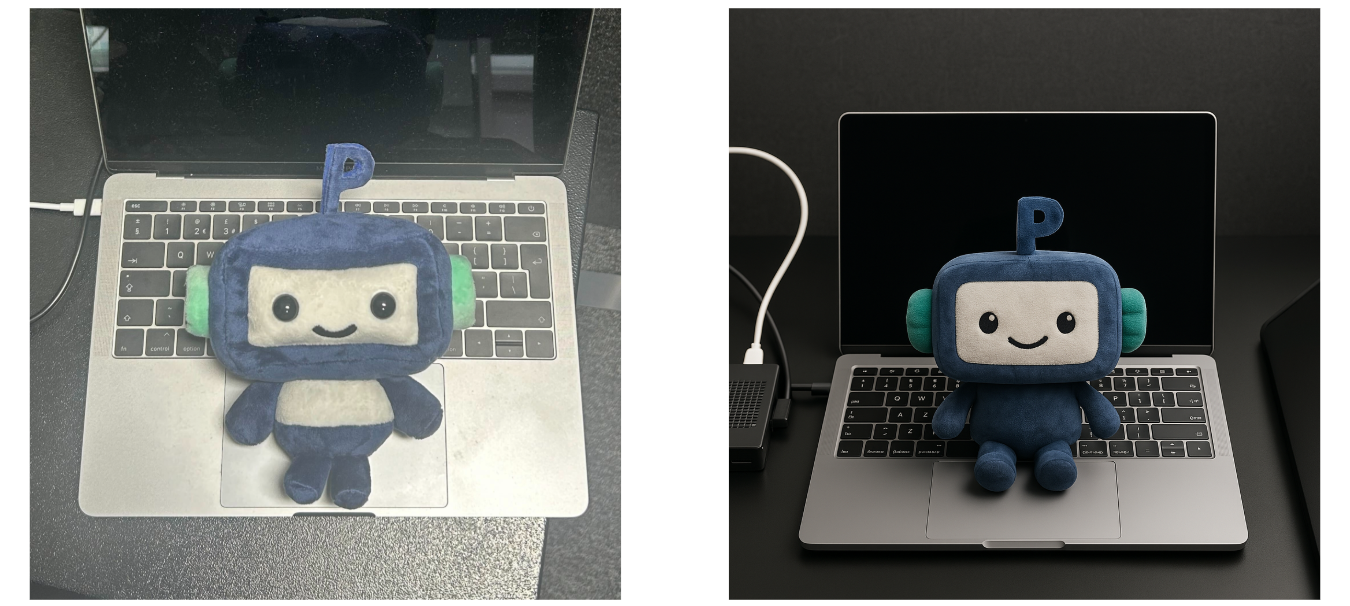

Over time, he stuck. Pecobot became the shorthand for system movement. When something deployed, he appeared. When something broke, he let us know. He showed up in Slack, then in dashboard UIs, then on desks, as a plush prototype, round and weighted. He didn't soften the tools. He gave them a presence.

The familiarity grew from function & usage, not marketing.

Later, we animated him. But we did it carefully.

We weren't trying to see what AI could generate. We already had the design, and the character already existed. The idea was to test whether we could add motion without surrendering authorship.

The process had six parts:

- We started with the original 2D sketch

- We rendered a 3D version in Blender

- We made a plush prototype with a manufacturing partner

- We wrote a highly specific visual prompt using ChatGPT

- We generated a starting illustration based on that prompt

- We passed it through Runway, requesting only light animation: a blink, a wave, a bounce

This was the prompt:

“Create a vibrant, playful scene featuring the uploaded character smiling and waving. Place the character in front of an imaginative, softly illustrated automation pipeline. Use pastel colours, soft gradients, light sketchy outlines, and an artistic, dreamy texture, similar to a hand-drawn, slightly surreal style. The pipeline should have flowing arrows, data packets, and soft mechanical details, keeping everything fun, abstract, and eye-catching. The character and background must blend together naturally in the same dreamy, colourful, illustrated style.”

The results were technically faithful. But something subtle shifted: some viewers assumed he was AI-generated from scratch, that nothing in him had been made by hand.

That's what “Plaything” speaks to.

It's not about whether the character is helpful. It's about how easily familiarity can mask the system it speaks for. Once a visual feels “friendly,” it becomes harder to read critically. The authorship dissolves. The aesthetic cues override the logic behind them.

This aligns with what legal researchers now call emotional data: the way AI systems increasingly infer and respond to sentiment. Not just tracking your clicks, but mirroring your mood. The more pleasant the surface, the more passive the interface appears, even when what's happening underneath is strategic, extractive, or opaque.

We don't see characters as brand tools. We see them as anchors.

Pecobot isn't decoration. He's a wayfinding device, a mechanism for letting both our team and our clients know when something has happened. His shape carries a tone. Not excitement, not delight: clarity.

That makes him closer in spirit to an emoji than a mascot. Emojis were never meant to replace language. They complete it; they resolve ambiguity. A full stop reads cold. A checkmark or a sparkle resolves tone. A 🍋 changes everything.

Pecobot belongs to that register. He doesn't speak for the product. He shows its state. And when a system shifts (deploys, fails, processes), he becomes the thing you notice.

So why keep using him, even now?

Because characters still matter. Not to make software friendlier, but to make it interpretable. In a culture where everything abstract becomes aestheticised, people need markers that tell them when they're looking at something human-made. Something that still carries authorship. Not inference.

We don't need Pecobot to be charming (though he is incredibly cute). We need him to be legible. And that's enough.