In the heart of Silicon Valley, a renowned AI startup, InnovAI, was on the brink of launching its revolutionary healthcare diagnostic tool. Months of relentless coding, training, and fine-tuning had culminated in a product that promised to detect diseases with unprecedented accuracy. Excitement buzzed through the office until, one fateful night, a security breach exposed a critical vulnerability in the company's AI pipeline.

A malicious actor had injected adversarial examples, causing the AI to misdiagnose patients and shaking the very foundation of InnovAI's credibility. This near-catastrophe underscored a harsh reality: securing AI pipelines isn't just an option; it's an imperative.

This article explores the strategic approaches to securing AI pipelines through rigorous testing, ethical hacking, and robust protection mechanisms within a DevOps context.

Understanding the AI Pipeline in DevOps

An AI pipeline encompasses all the stages involved in developing, deploying, and maintaining AI models: data collection, preprocessing, model training, validation, deployment, and ongoing monitoring.

When incorporated into a DevOps pipeline, the AI workflow must align with the principles of continuous integration and continuous delivery (CI/CD). However, AI models present unique challenges: they are data-dependent, prone to bias, and highly sensitive to adversarial manipulation. These characteristics make them an attractive target for cyberattacks and a potential weak point in the software delivery lifecycle.

Testing AI Components: Going Beyond Traditional QA

Traditional software testing does not suffice when it comes to AI. Instead, teams must adopt a multi-faceted approach that includes:

- Data Quality Testing: Ensuring that input data is accurate, complete, and free from malicious manipulation.

- Model Validation: Regularly assessing model accuracy, precision, recall, and fairness metrics.

- Bias and Fairness Testing: Identifying unintentional bias in data or model output, which could lead to ethical and reputational issues.

- Security Testing: Verifying the resilience of the AI model against attacks such as adversarial examples (crafted inputs) or model inversion (recovering training data from model outputs).
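The model validation and fairness checks in the list above can be illustrated with a minimal sketch in plain Python. The toy labels, the group names, and the choice of demographic parity gap as the fairness metric are assumptions made for illustration; a real project would pick the metrics that fit its domain.

```python
from __future__ import annotations

from typing import Sequence


def precision_recall(y_true: Sequence[int], y_pred: Sequence[int]) -> tuple[float, float]:
    """Precision and recall for binary labels (1 = positive)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


def demographic_parity_gap(y_pred: Sequence[int], groups: Sequence[str]) -> float:
    """Largest difference in positive-prediction rate between any two groups."""
    totals: dict[str, int] = {}
    positives: dict[str, int] = {}
    for p, g in zip(y_pred, groups):
        totals[g] = totals.get(g, 0) + 1
        positives[g] = positives.get(g, 0) + p
    rates = [positives[g] / totals[g] for g in totals]
    return max(rates) - min(rates)


# Toy data (hypothetical): true labels, model predictions, and a group attribute.
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 1, 0, 0, 1, 1, 0]
groups = ["a", "a", "a", "b", "b", "b", "b", "b"]

precision, recall = precision_recall(y_true, y_pred)
gap = demographic_parity_gap(y_pred, groups)
print(f"precision={precision:.2f} recall={recall:.2f} parity_gap={gap:.2f}")
```

Tracking a number like the parity gap alongside precision and recall for every model release makes a regression in fairness as visible as a regression in accuracy.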

Automating these tests is essential for integrating AI testing into fast-paced DevOps cycles. Tools such as MLflow, Great Expectations, and Seldon can help automate and standardise AI model testing.
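As a rough illustration of what an automated data quality gate might look like, the sketch below validates a batch of records against a small schema and derives an exit code that a CI job could use to fail the build. It is plain Python and does not use the API of any tool named above; the `SCHEMA` fields, their ranges, and the sample batch are hypothetical.

```python
from __future__ import annotations

# Hypothetical schema: field name -> (expected type, minimum, maximum).
SCHEMA = {
    "age": (int, 0, 120),
    "heart_rate": (float, 20.0, 250.0),
}


def validate_records(records: list[dict]) -> list[str]:
    """Return one human-readable message per failed check."""
    violations = []
    for i, row in enumerate(records):
        for field, (ftype, lo, hi) in SCHEMA.items():
            value = row.get(field)
            if value is None:
                violations.append(f"row {i}: {field} is missing")
            elif isinstance(value, bool) or not isinstance(value, ftype):
                # bool is a subclass of int, so it is rejected explicitly.
                violations.append(f"row {i}: {field} has type {type(value).__name__}")
            elif not lo <= value <= hi:
                # NaN fails every comparison, so it is reported here as well.
                violations.append(f"row {i}: {field}={value} outside [{lo}, {hi}]")
    return violations


# Hypothetical input batch with two deliberate problems.
batch = [
    {"age": 42, "heart_rate": 71.5},
    {"age": -3, "heart_rate": 80.0},   # age out of range
    {"age": 57},                       # heart_rate missing
]

problems = validate_records(batch)
for message in problems:
    print(message)

# In a CI job, this value would be passed to sys.exit() to block the pipeline.
exit_code = 1 if problems else 0
```

The type check here is deliberately strict (an integer heart rate is rejected); a team could relax that rule if upstream systems legitimately emit mixed numeric types.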

Ethical Hacking for AI Systems

Just as traditional systems benefit from penetration testing, AI systems should undergo AI-specific ethical hacking. This includes:

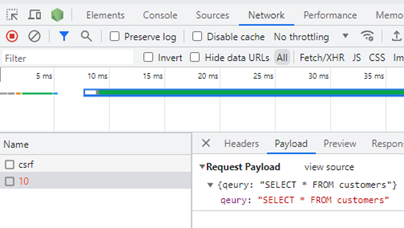

- Adversarial Testing: Injecting subtle but malicious modifications to input data to fool the AI model.

- Model Extraction Attacks: Attempting to reverse-engineer the model's architecture or logic, typically by querying it repeatedly and analysing its responses.

- Data Poisoning: Introducing corrupted or biased data during training to manipulate outcomes.

Simulating these attacks in a controlled environment helps DevOps and security teams identify vulnerabilities before malicious actors can exploit them.
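To make adversarial testing concrete, here is a minimal sketch of a Fast Gradient Sign Method (FGSM) step against a toy logistic regression model. The weights, bias, input, and `epsilon` are made-up values chosen for illustration, and a real test would target the team's own model in an isolated environment.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def predict_proba(weights: list, bias: float, x: list) -> float:
    """Probability of the positive class under a logistic regression model."""
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)


def sign(v: float) -> int:
    return (v > 0) - (v < 0)


def fgsm_perturb(weights: list, bias: float, x: list, y: int, epsilon: float) -> list:
    """One FGSM step: move each feature by epsilon in the direction that
    increases the cross-entropy loss. For logistic regression the gradient
    of the loss with respect to feature i is (p - y) * w_i."""
    p = predict_proba(weights, bias, x)
    grad = [(p - y) * w for w in weights]
    return [xi + epsilon * sign(g) for xi, g in zip(x, grad)]


# Hypothetical model parameters and a correctly classified positive input.
weights = [2.0, -1.5, 0.5]
bias = -0.2
x = [0.6, 0.2, 0.4]
y = 1

x_adv = fgsm_perturb(weights, bias, x, y, epsilon=0.3)
print("clean score:      ", round(predict_proba(weights, bias, x), 3))
print("adversarial score:", round(predict_proba(weights, bias, x_adv), 3))
```

With these toy values, a perturbation of at most 0.3 per feature is enough to push the score across the 0.5 decision threshold, which is the kind of fragility an adversarial test is meant to surface.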

Protecting AI in DevOps Workflows

Securing AI pipelines requires embedding security best practices throughout the DevOps process:

- Secure Data Handling: Apply encryption, access controls, and data lineage tracking.

- Model Governance: Implement version control, audit trails, and explainability for every model release.

- CI/CD for ML (MLOps): Use secure, repeatable CI/CD practices for model deployment.

- Monitoring and Alerts: Continuously monitor model performance and detect drift, anomalies, or unexpected behaviours.
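Drift detection, mentioned in the last item above, can be sketched with the Population Stability Index (PSI), one of several possible drift measures and not necessarily the right one for every model. The bin count, the sample data, and the `ALERT_THRESHOLD` value are assumptions for illustration; thresholds should be tuned per feature.

```python
import math


def psi(expected: list, actual: list, bins: int = 10) -> float:
    """Population Stability Index between a baseline sample and a live sample.
    Bins are equal-width over the baseline range; live values outside that
    range are clamped into the first or last bin."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if the baseline is constant

    def fractions(values: list) -> list:
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1
        # A small floor avoids log(0) and division by zero for empty bins.
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = fractions(expected), fractions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


# Hypothetical feature values: training baseline versus two production samples.
baseline = [i / 100 for i in range(100)]
stable = list(baseline)
shifted = [0.5 + i / 200 for i in range(100)]

ALERT_THRESHOLD = 0.25  # assumed value for this sketch

for name, sample in [("stable", stable), ("shifted", shifted)]:
    score = psi(baseline, sample)
    status = "ALERT" if score > ALERT_THRESHOLD else "ok"
    print(f"{name}: PSI={score:.3f} [{status}]")
```

Running a check like this on a schedule, per input feature and on the model's output scores, gives the monitoring stage a concrete signal to alert on.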

Fostering a security culture within the DevOps team is crucial. Developers, data scientists, and security engineers who understand the unique security challenges posed by AI can work together to build resilient and secure AI pipelines. Securing the AI pipeline is not just an IT concern; it's a business imperative.